In a previous post, I wrote about the beginnings of a Victron System Dashboard. Most of that is still in use; however, I’ve made significant improvements to the data collection that warrant an update.

Shortly after putting the old system into operation, I began having connectivity issues. Before that project, I had replaced the original Victron Nano Wi-Fi module with a StarTech USB300WN2X2D, chosen from Victron’s list of supported adapters, because of weak signal strength, but that adapter started having problems too. Somehow, the increased network traffic from the data collection was triggering network adapter disconnects.

Hardware Debugging & Improvements

I treated the first couple of disconnects as flukes. I unplugged the Wi-Fi dongle, plugged it back in, and it reappeared in the Victron Color Control GX interface. The problem was that it kept recurring. As soon as the interface had transmitted more than a small amount of traffic, it would drop the connection and, more often than not, require physically unplugging the dongle to recover.

Thinking that data volume might be the issue, I started by scaling back the update frequency of the MQTT feed, building in sleep delays to give the network link some idle time. That didn’t work. Next, I researched and wrote a ModbusTCP interface, whose compact binary format would reduce throughput further. That didn’t solve it either.
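To illustrate why ModbusTCP is so compact, here is a minimal, standard-library-only Python sketch of a single "Read Holding Registers" (function 0x03) exchange. It is not the ingester described in this post: the function names are hypothetical, and the unit ID and register address used in the example are placeholders, so look up real addresses in Victron’s published Modbus TCP register list. A request is only 12 bytes: a 7-byte MBAP header followed by a 5-byte PDU.

```python
import socket
import struct
from typing import List

READ_HOLDING_REGISTERS = 0x03


def build_read_request(transaction_id: int, unit_id: int, address: int, count: int) -> bytes:
    """Build a Modbus TCP 'Read Holding Registers' request frame (12 bytes)."""
    # PDU: function code, starting register address, number of registers.
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, address, count)
    # MBAP header: transaction id, protocol id (always 0), length of the
    # bytes that follow (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_response(frame: bytes, transaction_id: int) -> List[int]:
    """Decode a 'Read Holding Registers' response into unsigned 16-bit values."""
    tid, proto, _length, _unit = struct.unpack(">HHHB", frame[:7])
    if tid != transaction_id or proto != 0:
        raise ValueError("unexpected MBAP header")
    function = frame[7]
    if function & 0x80:  # high bit set marks an exception response
        raise ValueError(f"Modbus exception code {frame[8]}")
    byte_count = frame[8]
    data = frame[9:9 + byte_count]
    if len(data) != byte_count or byte_count % 2:
        raise ValueError("truncated register data")
    return list(struct.unpack(f">{byte_count // 2}H", data))


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since TCP may deliver a frame in pieces."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-frame")
        buf += chunk
    return buf


def read_registers(host: str, unit_id: int, address: int, count: int,
                   port: int = 502, transaction_id: int = 1) -> List[int]:
    """One request/response round trip over a fresh TCP connection."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(build_read_request(transaction_id, unit_id, address, count))
        header = _recv_exact(sock, 7)
        length = struct.unpack(">H", header[4:6])[0]
        body = _recv_exact(sock, length - 1)  # length already counts the unit id
        return parse_read_response(header + body, transaction_id)
```

A call such as `read_registers("192.168.1.50", unit_id=100, address=840, count=1)` (hypothetical host and addresses) returns raw 16-bit values; scaling and signedness come from the register list. In practice, a library such as pymodbus handles the framing and retries for you.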

Having ruled out anything directly throughput-related, I suspected the dongle’s power draw on the CCGX might be causing the issue. I bought a DC-powered USB 2.0 hub (I avoided USB 3.0 because of the interference it can cause in the 2.4 GHz band) and wired it in. The hub has a jumper that prevents it from drawing any power at all from the upstream port. It brought no improvement. It did let me incorporate the GPS dongle more easily, though, so it wasn’t a complete waste.

At this point, I finally enabled SSH on the CCGX and configured it for local access so I could read the logs. One line stood out:

Feb 27 05:16:16 ccgx user.err kernel: [181186.498748] hub 1-0:1.0: port 1 disabled by hub (EMI?), re-enabling...

It appeared in the middle of this sequence, which showed up every time the network adapter dropped:

Feb 27 05:16:16 ccgx user.err kernel: [181186.350860] usb 1-1.2.2: device descriptor read/8, error -71
Feb 27 05:16:16 ccgx user.err kernel: [181186.461364] hub 1-1.2:1.0: cannot disable port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.465820] hub 1-1.2:1.0: cannot reset port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.470092] hub 1-1.2:1.0: cannot reset port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.474334] hub 1-1.2:1.0: cannot reset port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.478637] hub 1-1.2:1.0: cannot reset port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.482849] hub 1-1.2:1.0: cannot reset port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.482879] hub 1-1.2:1.0: Cannot enable port 2. Maybe the USB cable is bad?
Feb 27 05:16:16 ccgx user.err kernel: [181186.490081] hub 1-1.2:1.0: cannot disable port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.490173] hub 1-1.2:1.0: unable to enumerate USB device on port 2
Feb 27 05:16:16 ccgx user.err kernel: [181186.494354] hub 1-1.2:1.0: cannot disable port 2 (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.498596] hub 1-1.2:1.0: hub_port_status failed (err = -71)
Feb 27 05:16:16 ccgx user.err kernel: [181186.498748] hub 1-0:1.0: port 1 disabled by hub (EMI?), re-enabling...
Feb 27 05:16:16 ccgx user.info kernel: [181186.498779] usb 1-1: USB disconnect, device number 123
Feb 27 05:16:16 ccgx user.info kernel: [181186.498809] usb 1-1.2: USB disconnect, device number 124
Feb 27 05:16:16 ccgx user.info kernel: [181186.800964] usb 1-1: new high-speed USB device number 3 using ehci-omap
Feb 27 05:16:16 ccgx user.info kernel: [181186.957794] usb 1-1: New USB device found, idVendor=0424, idProduct=2412
Feb 27 05:16:16 ccgx user.info kernel: [181186.957855] usb 1-1: New USB device strings: Mfr=0, Product=0, SerialNumber=0
Feb 27 05:16:16 ccgx user.info kernel: [181186.966705] hub 1-1:1.0: USB hub found
Feb 27 05:16:16 ccgx user.info kernel: [181186.967773] hub 1-1:1.0: 2 ports detected
Feb 27 05:16:16 ccgx user.info kernel: [181187.254211] usb 1-1.2: new high-speed USB device number 4 using ehci-omap
Feb 27 05:16:17 ccgx user.info kernel: [181187.371673] usb 1-1.2: New USB device found, idVendor=0409, idProduct=005a
Feb 27 05:16:17 ccgx user.info kernel: [181187.371734] usb 1-1.2: New USB device strings: Mfr=0, Product=0, SerialNumber=0
Feb 27 05:16:17 ccgx user.info kernel: [181187.380432] hub 1-1.2:1.0: USB hub found
Feb 27 05:16:17 ccgx user.info kernel: [181187.381317] hub 1-1.2:1.0: 4 ports detected
Feb 27 05:16:17 ccgx user.info kernel: [181187.668334] usb 1-1.2.2: new high-speed USB device number 5 using ehci-omap
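Spotting this pattern by eye gets tedious across days of logs. The following sketch, assuming the syslog line format shown above, counts the -71 errors (the negated Linux errno EPROTO, a protocol error) and collects the EMI-triggered port resets so they can be lined up against gaps in the dashboard. The names are hypothetical, and it only parses a saved copy of the log; it doesn’t touch the CCGX itself.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Matches kernel lines such as:
# "Feb 27 05:16:16 ccgx user.err kernel: [181186.498748] hub 1-0:1.0: ..."
LINE_RE = re.compile(
    r"^(?P<stamp>\w{3}\s+\d{1,2}\s\d{2}:\d{2}:\d{2})\s+"
    r"(?P<host>\S+)\s+(?P<level>\S+)\s+kernel:\s+"
    r"\[\s*(?P<uptime>\d+\.\d+)\]\s+(?P<message>.*)$"
)

# Both "error -71" and "(err = -71)" forms appear in the log.
EPROTO_RE = re.compile(r"(?:error|err =) -71\b")
EMI_MARKER = "disabled by hub (EMI?)"


@dataclass
class KernelEvent:
    stamp: str      # syslog wall-clock timestamp (no year)
    level: str      # facility.priority, e.g. "user.err"
    uptime: float   # seconds since boot, from the kernel ring buffer
    message: str


def parse_line(line: str) -> Optional[KernelEvent]:
    """Parse one syslog line; return None if it is not a kernel message."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return KernelEvent(m["stamp"], m["level"], float(m["uptime"]), m["message"])


def summarize(lines: Iterable[str]) -> dict:
    """Count EPROTO errors and collect EMI-triggered hub port resets."""
    eproto = 0
    emi_resets: List[KernelEvent] = []
    for line in lines:
        event = parse_line(line)
        if event is None:
            continue
        if EPROTO_RE.search(event.message):
            eproto += 1
        if EMI_MARKER in event.message:
            emi_resets.append(event)
    return {"eproto_errors": eproto, "emi_resets": emi_resets}
```

Feeding it the lines of a copied log file (for example, `summarize(open("messages.txt"))`, with a hypothetical filename) gives the uptime of each reset, which is easier to correlate with missing data than wall-clock stamps that lack a year.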

The Linux kernel was power-cycling the internal USB hub after concluding that EMI (electromagnetic interference) was causing the errors, and since the CCGX is installed next to equipment that likely produces some, that seemed very plausible. I bought ferrite beads for each of the USB cables to try to suppress it. This also did not work.

By then, winter had fully set in, bringing weeks of snow, cold, and wind. I left the system idle, not recording, while I planned the next approach: a separate, dedicated Wi-Fi bridge connected to the Ethernet port on the back of the CCGX and mounted farther away. Unfortunately, running a continuous Ethernet cable isn’t feasible, or I would’ve done that instead.

I wired in another DC PoE injector, the same type I’ve used in several other places in the trailer, and connected it to a Ubiquiti NanoStation loco M2 configured to join the appropriate network. This finally worked.

Software Improvements

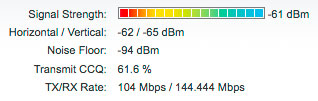

The NanoStation should provide a much more resilient connection than the USB dongles ever did. It’s designed for long-range Wi-Fi bridging and works well with other Ubiquiti hardware, which is what the rest of my network uses. Anecdotally, the USB dongles typically hovered around 60% signal strength, while the NanoStation sits at the maximum.

I kept the ModbusTCP implementation as the primary data ingester, preferring its lower overhead and other traits. It does have what is probably an accidental omission in the GPS registers, though: altitude isn’t available. For that value, I’m stuck using MQTT.
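Combining the two sources can be as simple as caching the latest altitude from MQTT and attaching it to each polled ModbusTCP sample. This sketch assumes Venus OS dbus-mqtt style topics of the form `N/<portal id>/gps/<instance>/Altitude` with a JSON payload like `{"value": 251.5}`; treat that layout, the class name, and the sample values as assumptions to verify against your own broker.

```python
import json
from typing import Optional


class AltitudeCache:
    """Keeps the most recent GPS altitude seen on the MQTT feed.

    Assumed topic layout: N/<portal id>/gps/<device instance>/Altitude,
    with a JSON object payload carrying a "value" key.
    """

    def __init__(self, portal_id: str) -> None:
        self.prefix = f"N/{portal_id}/gps/"
        self.altitude: Optional[float] = None

    def on_message(self, topic: str, payload: bytes) -> bool:
        """Update the cache; return True if the message was an altitude."""
        if not (topic.startswith(self.prefix) and topic.endswith("/Altitude")):
            return False
        data = json.loads(payload)
        value = data.get("value") if isinstance(data, dict) else None
        # Treat a null value as "unknown" rather than as zero altitude.
        self.altitude = None if value is None else float(value)
        return True

    def merge(self, modbus_sample: dict) -> dict:
        """Return a copy of a polled ModbusTCP sample with the latest altitude."""
        return {**modbus_sample, "altitude": self.altitude}
```

With paho-mqtt, for instance, the client’s `on_message` callback receives a message object whose `topic` and `payload` attributes can be passed straight to `AltitudeCache.on_message`, while the Modbus poll loop calls `merge` on each sample.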

ModbusTCP and MQTT differ in how they’re used, which is worth noting. ModbusTCP is a polling-based protocol, whereas MQTT is notification-based. For raw readings, MQTT is often the better option because it only notifies on changes, but I preferred the ModbusTCP route because it updates at a regular interval, which makes some of my custom calculations easier (e.g., net solar yield at higher precision than com.victronenergy.solarcharger’s /History/Daily/0/Yield provides).
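A fixed polling interval is what makes a simple numerical integration workable. The sketch below applies the trapezoidal rule to evenly spaced power samples (W) to get energy (Wh). The `net_energy_wh` helper, which treats "net" as PV power minus load power, is a hypothetical definition for illustration only; substitute whichever power series you actually subtract.

```python
from typing import Sequence


def energy_wh(power_w: Sequence[float], interval_s: float) -> float:
    """Integrate evenly spaced power samples (W) into energy (Wh).

    Trapezoidal rule: each interval contributes dt * (P[i] + P[i+1]) / 2,
    which sums to dt * (sum(P) - (P[0] + P[-1]) / 2).
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    if len(power_w) < 2:
        return 0.0  # fewer than two samples span no time
    area_ws = interval_s * (sum(power_w) - (power_w[0] + power_w[-1]) / 2)
    return area_ws / 3600.0  # watt-seconds -> watt-hours


def net_energy_wh(pv_w: Sequence[float], load_w: Sequence[float],
                  interval_s: float) -> float:
    """Hypothetical 'net' yield: PV energy minus load energy over the same polls."""
    if len(pv_w) != len(load_w):
        raise ValueError("series must be sampled on the same polls")
    diff = [pv - load for pv, load in zip(pv_w, load_w)]
    return energy_wh(diff, interval_s)
```

Because every sample covers the same interval, no per-sample timestamps are needed; with notification-based MQTT updates, the time between values varies and each step would have to be weighted by its own duration.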

As part of this, I’ve also refactored some of the code after finding better ways to ingest the data. I plan to tidy it up and then post it.